Cory Clark is Director of the Adversarial Collaboration Project at the University of Pennsylvania and a Visiting Scholar in the Psychology Department. She studies political biases (on both the left and the right) and how moral and political concerns influence evaluations of science.

A tempting intuition is that human reasoning works in precisely the way that we feel it does. We are exposed to certain facts and information; we think about them in a cool, rational manner; and then we decide whether and how to assimilate that new information to form more empirically accurate beliefs.

However, human cognition (like all animal cognition) evolved to promote fitness – to increase the odds of producing offspring in high quantity and of high quality. Although holding accurate beliefs can often promote fitness (eg, correctly distinguishing between nutritious food and toxic substances), humans face other challenges as well. Social acceptance and social status are critical for human fitness, so humans evolved to reason in ways that help them attain and maintain social status and belonging.

For humans, social ostracisation can be extremely costly – it can eliminate one’s ability to secure a high-quality mate and threaten one’s physical well-being and survival. Consider, for example, intragroup religious similarity and intergroup religious differences.

Throughout much of human history (and even in the present day), one could be persecuted, exiled, or killed for holding the wrong religious beliefs. And so people evolved to process and assimilate information in ways that conform to important local beliefs.

Similarly, social status can be extremely beneficial – it can increase one’s ability to secure high-quality mates and other resources. One of the best ways to attain social status is to provide benefits to other group members, so humans evolved to signal loyalty and value to their social groups by being fierce defenders of the group’s interests.

In recent decades, secular tribal identities have risen to prominence in many advanced Western societies. Political ideology is now a primary source of ingroup identity and intergroup conflict, which makes political preferences and beliefs particularly vulnerable to tribal cognition.

Political tribal cognition mainly works in two ways. First, people selectively seek out information that supports their groups’ beliefs and avoid information that challenges their groups’ beliefs (eg, by exposing themselves to a biased set of media outlets). Second, once people are exposed to information, they are highly credulous and accepting toward congenial information and excessively sceptical and critical of uncongenial information.

In the laboratory, political tribal cognition has been demonstrated dozens of times. For example, people evaluated the exact same policies as more effective when they were proposed by ingroup politicians than when they were proposed by outgroup politicians. And people evaluated the exact same scientific methods as higher quality when the results supported rather than challenged their own political beliefs. Although scientific evidence may be the ideal basis for forming effective policy, our evaluations of evidence quality are biased: we judge evidence as higher quality when it accords with our political groups’ beliefs.

A recent meta-analysis, which summarised the findings of over 50 political bias studies, found that both liberals and conservatives are politically biased and to virtually identical degrees. Although scholarship often paints an unflattering portrait of political conservatives, it seems liberals are similarly prone to allowing their political identities to distort their reasoning.

However, liberals and conservatives have slightly different biases. Biases tend to reveal themselves for topics, values, and policy preferences that are central to a group’s identity. Generally, whichever group cares more about a particular issue – whichever group has a stronger attachment to its preferred narrative – will demonstrate stronger biases.

Liberals are particularly prone to biases when evaluating information with significance to relatively lower-status groups (eg, women, ethnic and racial minorities). Liberals, on average, are more egalitarian than conservatives and have stronger desires to protect relatively low-status groups.

Consequently, they demonstrate biases against any information that portrays low-status groups unfavourably (eg, as less skilled at maths, less proficient leaders, less intelligent, or more violent) compared to high-status groups, whereas conservatives evaluate such information more consistently regardless of which group it favours.

For example, across a couple of sets of studies, people evaluated science on sex differences more favourably when women were portrayed more positively than men (eg, as better drawers, less prone to lying, and more intelligent) than when men were portrayed more positively than women. These tendencies were stronger among more left-wing participants.

In an ongoing project, psychology professors reported that they would likely be punished or ostracised by their peers if they reported data that portrayed women or racial minorities in an unflattering way, or if they attributed group differences (ie, the underrepresentation or underperformance of certain groups) to anything other than discrimination.

Many also asserted a high degree of confidence that discrimination is not the only cause of group disparities, and those who did so were more likely to report self-censoring their own views.

This bias among liberal social scientists likely explains – at least in part – various false-positive findings and failed interventions. For example, implicit racial bias, which fits liberals’ preference for discrimination-based explanations of group differences, was once thought to explain disparate outcomes in various domains (eg, educational and career outcomes).

However, recent meta-analyses of this literature reveal that implicit bias may be virtually unrelated to discriminatory behaviour. And despite serious efforts to create implicit bias trainings and interventions, there is little to no evidence that these have had or will have any positive effect. Cases of unreliable research like this plague the social sciences.

These false positives and expensive failed interventions (and their apparent basis in left-wing political bias) have fostered mistrust of social scientists and of higher education more broadly. Moreover, a growing body of research has uncovered discrimination in academia against conservative scholars, which helps explain the stark political imbalance in the social sciences.

Politically homogeneous communities not only deter original thinkers from entering but also intimidate members who might occasionally disagree. This can produce false consensus, elevate expedient but empirically inaccurate ideas, and stifle better ones.

Over the past several years, most organisations have become increasingly politically left-wing. This is not a problem in principle, but the ideological homogeneity of such groups can create environments that encourage groupthink, foster systematic biases, and suppress dissent. Left unchecked, politically homogeneous communities can become engines for false narratives that provide an unreliable basis for policy.

Just as experts can be biased in their research, manipulating methods and analyses to confirm their preferred hypotheses, organisations and policymakers can be biased in their recruitment of research and expertise, selectively attending to (and ignoring) research to support their political agenda or bottom line.

Organisations frequently apply scientific findings to policymaking prematurely, based on unjustified assumptions that laboratory results generalise to real-life situations. Moreover, organisations and the broader public tend to falsely equate scientific support with truth and certainty. In reality, when Scientific Report A says X, there is often a Scientific Report B saying Y. People and organisations can then choose to believe whichever report is more convenient.

Most organisations are liberal (or answer to liberals) and so their biases likely resemble those of liberal scholars (and likely include discrimination against conservatives and conservative perspectives). To minimise these concerns in organisational decisionmaking, organisations might consider the reforms that science is seeking to adopt.

First, organisations can increase accountability by promoting transparency in all decisionmaking. Second, they can adopt an adversarial collaboration approach: solving problems by engaging both advocates and dissenters of particular perspectives. This could mean engaging adversaries directly, funding research that takes an adversarial collaboration approach, or consulting diverse expertise.

Any human-guided endeavour that addresses social issues will be vulnerable to biases that can steer people away from accuracy. Humans conceive questions and problems; humans collect data to address them; humans disseminate findings to other humans; and humans apply those findings to create social change.

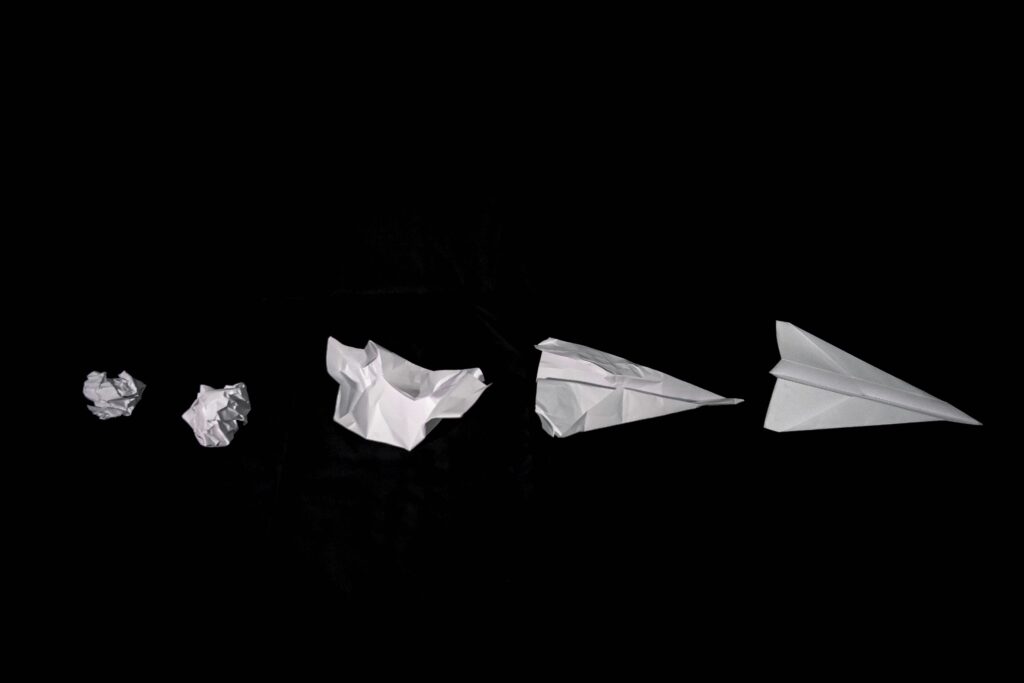

Just as in the game of telephone, where an original message is corrupted as it passes from person to person, so too in science, biases distort each stage of the research process.

Unfortunately, consulting experts and collective knowledge is still the best basis for making many kinds of decisions. When we do consult experts, however, we should be sure to ask a critical question: “Which other experts disagree with you on this?” And we should consult those experts as well.